A Tech win by my own assessment.

–rare email feedback, with highlighting by me. It is clear to me the Hong Kong OMS interviewer gave the most direct (negative) feedback. I feel grateful for that.

I feel this was a technical win. I think only the Hongkong OMS interviewer found some weakness in me.

I feel perm role selection/screening was more stringent than contractor screening because it also covers leadership, communication, personality match, ownership… The new permanent hire is often seen as a potential manager to join the “race”.

In contrast, contractors are mostly assessed for technical + basic communication/attitude.

In SG, more than half the time I was assessed as a dev lead (or architect) but I don’t want that kind of role. The U.S. contract market is better in this respect.

As he interviewed till the end, I’d like to share a bit of feedback.

The interviewers believed he had good industry experience (low latency, low-level coding, memory usage, CPU usage, MSA) and can implement/maintain systems, and appreciated his honesty e.g. not hiding the fact that he didn’t perform performance testing.

However, the interviewers wished to see more leadership and communication skills cognizant of 15+ years experience, and to conduct his own tests rather than easily take others’ assumptions at face value.

— Tokyo interviewer

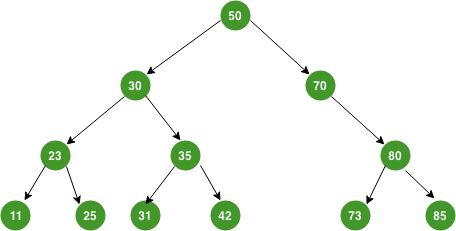

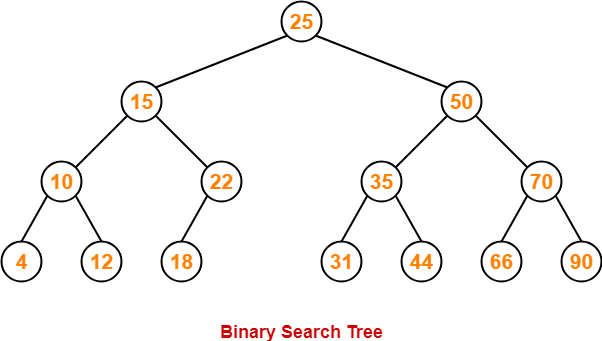

Given a BST, how do you insert and look up by key?

Lastly, how do you remove an arbitrary branch node? Need a deterministic algorithm to reshuffle affected subtree and keep the BST property. Considering the python coding rounds, this is about the hardest pure-algo question throughout the interview process.

- This level of difficulty is very different from tech shops

- This level of difficulty is much lower than the coreJava QQ questions
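A minimal sketch of the three BST operations asked above, written in Java rather than the Python used in the coding rounds. The class name `Bst`, its String values and the helper `keysInOrder` are hypothetical. For a branch node with two children this sketch copies the in-order successor into the node and then removes the successor from the right subtree. That is one common deterministic choice, not necessarily what the interviewer expected.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration: unbalanced BST with int keys and String values.
final class Bst {
    private static final class Node {
        int key;
        String value;
        Node left, right;
        Node(int key, String value) { this.key = key; this.value = value; }
    }

    private Node root;

    void insert(int key, String value) { root = insert(root, key, value); }

    private Node insert(Node n, int key, String value) {
        if (n == null) return new Node(key, value);
        if (key < n.key) n.left = insert(n.left, key, value);
        else if (key > n.key) n.right = insert(n.right, key, value);
        else n.value = value; // duplicate key: overwrite the value
        return n;
    }

    String lookup(int key) {
        Node n = root;
        while (n != null) {
            if (key == n.key) return n.value;
            n = key < n.key ? n.left : n.right;
        }
        return null; // not found
    }

    void remove(int key) { root = remove(root, key); }

    private Node remove(Node n, int key) {
        if (n == null) return null;
        if (key < n.key) n.left = remove(n.left, key);
        else if (key > n.key) n.right = remove(n.right, key);
        else {
            // Zero or one child: splice the node out.
            if (n.left == null) return n.right;
            if (n.right == null) return n.left;
            // Two children: copy the in-order successor (leftmost node of the
            // right subtree) into this node, then delete the successor below.
            Node s = n.right;
            while (s.left != null) s = s.left;
            n.key = s.key;
            n.value = s.value;
            n.right = remove(n.right, s.key);
        }
        return n;
    }

    // In-order traversal; yields sorted keys if the BST property holds.
    List<Integer> keysInOrder() {
        List<Integer> out = new ArrayList<>();
        collect(root, out);
        return out;
    }

    private void collect(Node n, List<Integer> out) {
        if (n == null) return;
        collect(n.left, out);
        out.add(n.key);
        collect(n.right, out);
    }
}
```

Using the in-order predecessor instead of the successor works equally well. Either way the affected subtree keeps the BST property without any rebalancing.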

— QQ interviewer Ming

Q: suppose you find your prod log file suddenly stops growing, what do you do?

%%A: check disk space; check whether a DB query is hung due to a pending transaction; check whether socket input/output is blocking

%%A: take a thread dump, a nice JVM feature with no built-in equivalent in c++ or other native applications
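In practice the thread dump usually comes from outside the process, e.g. `jstack <pid>` or `kill -3 <pid>`. The same information is also reachable in-process through the standard `java.lang.management` API, which the sketch below uses. The class name `ThreadDumper` is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper: renders a simple thread dump of the current JVM.
final class ThreadDumper {
    static String dump() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        StringBuilder sb = new StringBuilder();
        // false/false: skip locked-monitor and synchronizer details.
        for (ThreadInfo info : mx.dumpAllThreads(false, false)) {
            sb.append('"').append(info.getThreadName()).append("\" ")
              .append(info.getThreadState()).append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                sb.append("    at ").append(frame).append('\n');
            }
        }
        return sb.toString();
    }
}
```

If the logging thread shows up as BLOCKED or stuck in a socket read or JDBC call, that points at the suspects listed in the first answer.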

Q: how did you tune your jvm?

Q2: how did you size the heap enough to ensure no jGC for entire day?

%%A: We estimated the high watermark (like 2GB) and configured the JVM with a heap bigger than that.

This technique is simple and crude but it usually works. I think interviewer may not be impressed but I was confident because … it works!
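One way to get a rough watermark is to ask the JVM for the peak usage of its heap pools near the end of a trading day. This is a sketch, not what was done in the project. The class name `HeapWatermark` is hypothetical, and the sum of per-pool peaks is only an upper-bound proxy because the pools may peak at different times. The heap itself is fixed with the standard `-Xms`/`-Xmx` flags, e.g. `-Xms4g -Xmx4g` as an illustrative value. With a generational collector the young generation also has to be big enough, since a minor GC is triggered when eden fills up, not when the whole heap does.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper for estimating the heap high watermark.
final class HeapWatermark {
    // Sum of the peak "used" bytes across all heap memory pools.
    // Pools can peak at different moments, so this may overestimate.
    static long peakHeapBytes() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) continue;
            MemoryUsage peak = pool.getPeakUsage();
            if (peak != null) total += peak.getUsed();
        }
        return total;
    }

    // Maximum heap the JVM will attempt to use (roughly what -Xmx sets).
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }
}
```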

Q2b: but will that prevent GC? Do you know every GC must stop all application threads first? (typical QQ)

%%A: at least the app thread can run after the snapshot, during the actual collection, right? I assume the snapshot is quick.

Q: in java, even without any protection, reading a 32-bit int will give you either the before-value or the after-value, right?

%%A: Not in c++. See c++11 atomic{int}^AtomicInteger.java #Bool can be half-written

Q: beside speed, what are other benefits/advantages of lock-free compared to lock-based designs?

%%A: the unsuccessful thread can do something else before retrying, rather than block indefinitely

%%A: a blocked thread (due to lock contention) is likely context-switched off the CPU. By the time it resumes, its working set has probably been evicted from the CPU data caches.

Q: single-producer, single-consumer … can you design an efficient data structure to share data between them

Now I think we only have one shared mutable variable: the producer’s moving pointer. Interviewer hinted that all we need is for the producer to notify the consumer of the new position of the moving pointer in the data structure. Perhaps we don’t need lockfree. Volatile is probably sufficient.

Aha — If the consumer thread needs to wait (not busy-wait), then notification is the key, so condVar is more suitable than lockfree. I need to be more familiar, more fluent with the fundamental constructs (thread class, mutex+condVar)
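A minimal sketch of the mutex+condVar notification, using `ReentrantLock` and `Condition` from `java.util.concurrent.locks`. The class `BlockingCursor` and its method names are hypothetical. It only carries the producer's position, on the assumption that the producer fills the data slots before calling `publish`.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical illustration: producer announces its position, consumer sleeps until it moves.
final class BlockingCursor {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition advanced = lock.newCondition();
    private int published = 0; // guarded by lock

    // Producer: announce that everything before newPosition is ready to read.
    // Data written before this call is visible to the consumer once it
    // returns from awaitBeyond, because both sides go through the same lock.
    void publish(int newPosition) {
        lock.lock();
        try {
            published = newPosition;
            advanced.signalAll();
        } finally {
            lock.unlock();
        }
    }

    // Consumer: sleep (no busy-wait) until the producer has moved past lastSeen.
    int awaitBeyond(int lastSeen) throws InterruptedException {
        lock.lock();
        try {
            while (published <= lastSeen) { // loop guards against spurious wakeups
                advanced.await();
            }
            return published;
        } finally {
            lock.unlock();
        }
    }
}
```

The `while` loop around `await()` is the part worth being fluent with: the predicate is always rechecked under the lock after a wakeup.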

I tend to think of that moving pointer as an integer.
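The volatile idea can be sketched as follows, assuming a fixed-capacity, append-only array and a consumer that polls instead of sleeping. The class `SpscLog` and its methods are hypothetical. The moving pointer is a plain `int` index, and it is the only variable both threads touch.

```java
import java.util.function.LongConsumer;

// Hypothetical illustration: single producer, single consumer, append-only.
final class SpscLog {
    private final long[] slots;
    private volatile int published = 0; // written by the producer only
    private int consumed = 0;           // touched by the consumer only

    SpscLog(int capacity) { slots = new long[capacity]; }

    // Producer thread only. Returns false when the array is full.
    boolean offer(long value) {
        int next = published;
        if (next == slots.length) return false;
        slots[next] = value;  // plain write into the slot
        published = next + 1; // volatile write publishes the slot to the consumer
        return true;
    }

    // Consumer thread only. Feeds every newly published value to sink
    // and returns how many values were drained.
    int drain(LongConsumer sink) {
        int limit = published; // volatile read: slots below limit are visible
        int n = 0;
        while (consumed < limit) {
            sink.accept(slots[consumed++]);
            n++;
        }
        return n;
    }
}
```

No CAS is needed because each variable has exactly one writer. The volatile write/read pair on `published` gives the happens-before edge that makes the slot contents visible.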

I asked interviewer: are these concurrency knowledge and skills needed in your projects?

Interviewer: “Your CV mentioned these skills”. I believe interviewer implicitly answered NO. Standard practice of verifying/scrutinizing claims on CV!

–This OMS interviewer was trying to break the candidate…

Q: describe CAS. I recalled the details in https://bintanvictor.wordpress.com/2018/04/05/cas-cpu-instruction-takes-3-inputs/
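In Java the three inputs map onto `AtomicInteger.compareAndSet`: the memory location is the `AtomicInteger` itself, plus the expected value and the new value. A small sketch follows; the class `CasDemo` and its methods are hypothetical. `tryIncrement` makes a single attempt and reports failure, so the caller is free to do something else before retrying. `updateMax` is the usual retry loop.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration of the two common CAS usage shapes.
final class CasDemo {
    // Single attempt: returns false if another thread changed the counter
    // between our read and our CAS. The caller decides what to do next.
    static boolean tryIncrement(AtomicInteger counter) {
        int seen = counter.get();
        return counter.compareAndSet(seen, seen + 1);
    }

    // Retry loop: raise max to candidate unless it is already at least that.
    // Returns the value of max as observed by this call on exit.
    static int updateMax(AtomicInteger max, int candidate) {
        while (true) {
            int seen = max.get();
            if (candidate <= seen) return seen;
            if (max.compareAndSet(seen, candidate)) return candidate;
            // CAS failed: another thread updated max, so re-read and retry.
        }
    }
}
```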

Q: how many clock cycles for a CAS instruction?

See further details in toy^surgeon

Q: what’s your actual CAS usage in your project, not a textbook use case?

%%A: At citi-muni, I had two threads putting quotes on a lockfree queue or stack. Queue is natural. In hindsight, the stack can be useful — when readers want to process the latest quote first, LIFO.
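For illustration only, here is a sketch of a CAS-based LIFO stack (the classic Treiber stack) built on `AtomicReference`. It is not the citi-muni code, and the class name `LockFreeStack` is hypothetical. Every push allocates a fresh node, which together with garbage collection sidesteps the ABA problem a C++ version would have to handle.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical illustration: Treiber stack, safe for multiple pushers and poppers.
final class LockFreeStack<T> {
    private static final class Node<T> {
        final T item;
        final Node<T> next;
        Node(T item, Node<T> next) { this.item = item; this.next = next; }
    }

    private final AtomicReference<Node<T>> top = new AtomicReference<>();

    void push(T item) {
        Node<T> oldTop;
        Node<T> newTop;
        do {
            oldTop = top.get();
            newTop = new Node<>(item, oldTop);
        } while (!top.compareAndSet(oldTop, newTop)); // retry if top moved
    }

    // Returns the most recently pushed item, or null if the stack is empty.
    T pop() {
        Node<T> oldTop;
        do {
            oldTop = top.get();
            if (oldTop == null) return null;
        } while (!top.compareAndSet(oldTop, oldTop.next));
        return oldTop.item;
    }
}
```

A reader calling `pop()` always gets the latest quote first, which is the LIFO behaviour described in the answer.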

Q: how would you design an order book, if you ignore your current system?

Q: why did you say array-based is faster than AVL?

%%A: most likely cache efficiency

Q: what’s the performance level of your order book? “I (the interviewer) would use array-based order book .. can easily achieve 1M msg/sec”

Q: what’s the disadvantage of an order book based on AVL tree?
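A sketch of what "array-based" could mean for one side of the book, under assumptions the interviewer never spelled out: prices are integer ticks inside a known band, and each array slot holds the aggregate quantity at that price. The class `ArrayBidBook` and all its names are hypothetical. Locating a price level is a single index computation on contiguous memory, which fits the cache-efficiency argument. A tree instead chases pointers and rebalances on insert/delete, at O(log n) per operation.

```java
// Hypothetical illustration: bid side of an order book as a flat array of price levels.
final class ArrayBidBook {
    static final long EMPTY = Long.MIN_VALUE; // returned when there are no bids

    private final long minTick; // lowest price (in ticks) the book can hold
    private final long[] qty;   // aggregate quantity per price level
    private int best = -1;      // index of the highest non-empty level, -1 if none

    ArrayBidBook(long minTick, int levels) {
        this.minTick = minTick;
        this.qty = new long[levels];
    }

    void add(long priceTick, long quantity) {
        if (quantity <= 0) throw new IllegalArgumentException("quantity must be positive");
        int i = index(priceTick);
        qty[i] += quantity;
        if (i > best) best = i;
    }

    void reduce(long priceTick, long quantity) {
        int i = index(priceTick);
        if (quantity <= 0 || quantity > qty[i]) throw new IllegalArgumentException("bad quantity");
        qty[i] -= quantity;
        // If the best level emptied, scan down to the next non-empty level.
        if (i == best) {
            while (best >= 0 && qty[best] == 0) best--;
        }
    }

    long bestBidTick() { return best < 0 ? EMPTY : minTick + best; }

    long quantityAt(long priceTick) { return qty[index(priceTick)]; }

    private int index(long priceTick) {
        long offset = priceTick - minTick;
        if (offset < 0 || offset >= qty.length) throw new IllegalArgumentException("price outside band");
        return (int) offset;
    }
}
```

The trade-offs are the bounded price band, memory wasted on empty levels, and the downward scan when the best level empties. A tree has none of those costs but pays for every lookup.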

— coding^QQ^non-tech questions

I think I did well on non-tech (A-) and coding (A), OK on QQ (B). With the non-technical, again, I feel interviewers can’t reach conclusions.

I believe the tough questions listed above are classic QQ i.e. theoretical knowledge not needed in real projects. Interviewers may consider it zbs but I would call it QQ.

Consistency and stability in high-end coreJava QQ topics — No real change since 2007

Do I look like Deepak? I think with algos I seldom feel fake and broken. As to the QQ topics if I am armed with a tidbit of c++ low-level knowledge then I can often defend myself, because on those questions, c++ is invariably more low-level than java. There are still some “weakness topics” where I may come across as … superficial+academic, like Deepak.

I generally concede to the interviewer, but not today during the “CAS justification” discussion.